Webinar Series by ISPRS WG III/9

Environmental & Health Concerns: Geospatial Technology

ISPRS WG III/9 (Geospatial Environment and Health Analytics) Webinar

Speaker: Dr. Pinliang Dong

Topic: LiDAR Applications in Urban Environmental Studies

Date/Time: February 25, 2026, 2 PM GMT (Greenwich Mean Time)

» Read more details

Early Registration Discount – Only 2 Weeks Left!

Join the global geospatial community at the XXV ISPRS Congress 2026

📍 Toronto, Canada | 🗓 4–11 July 2026

Register now and enjoy discounted early registration fees!

Why register early?

• Save with special early registration pricing

• Plan ahead, get your visa, and book your conference travel

• Access a world-class program: plenaries, technical sessions, workshops & industry talks

• Expand your international network of researchers, professionals & decision-makers

📌 Two weeks. One decision. Countless opportunities.

👉 Register now and save: https://isprs2026.org/puzf

The 2026 International Student Competition on Digital Architectural Design

Klang, Malaysia | Final Presentation & Award Ceremony: July 2026 | Work Exhibition: November 2026

The 2026 International Student Competition on Digital Architectural Design invites undergraduate and graduate students worldwide to explore innovative digital and architectural solutions for urban regeneration in Bandar Baru Klang.

Theme: Think Beyond the City — Reimagine Klang’s Future

• Open to undergraduate and graduate students worldwide

• Teams of up to 6 students with up to 2 supervisors

• Awards up to USD 8,000

• Top 10 teams will be invited to on-site final presentations in Malaysia

• Registration deadline: 15 March 2026

» Read more details

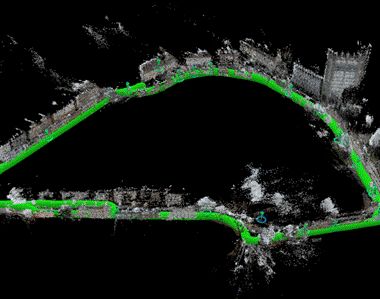

A New Dataset for Geospatial Visual Localisation: egenioussBench

Determining a camera’s pose from images, known as visual localisation, is fundamental to applications from autonomous driving and robotics to augmented reality, yet existing datasets face two key issues. First, many lack the scale needed for large scenes, limiting progress towards truly scalable methods. Second, those that do cover large scenes often provide imprecise ground-truth poses for the query images. egenioussBench overcomes these limitations by pairing geospatial reference data, a high-resolution aerial 3D mesh and a CityGML LoD2 model, with query data consisting of map-independent ground-level smartphone imagery whose centimetre-accurate poses were obtained via PPK and GCP/CP-aided adjustment.

Read more »